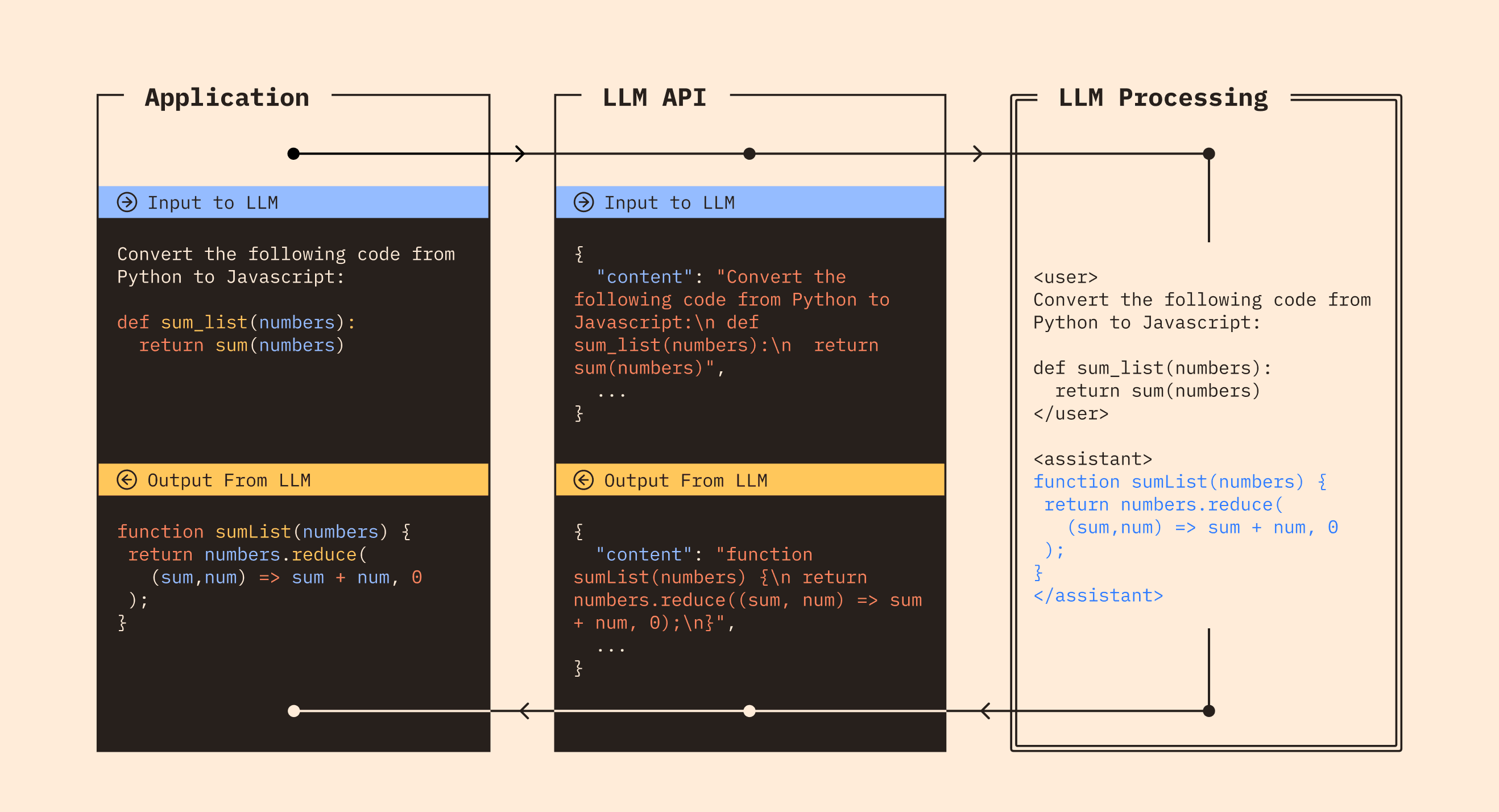

Integrating LLMs into software applications is as simple as calling an API. While the specifics vary between providers, most LLM APIs have converged on some common patterns.

Behind the scenes, the LLM is still just extrapolating from text. The API call is unpacked into raw text, which is then processed by the model. Likewise, the model outputs raw text, which is then packed into a structured response.
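Here is a minimal Python sketch of that unpacking and packing. It assumes a made-up template in which each message is wrapped in hypothetical `<|role|>` and `<|end|>` markers, and the prompt ends with an open assistant turn for the model to continue. Real models each use their own template, so treat the markers and the `render_prompt` / `parse_completion` names as illustrative only.

```python
# Illustrative only: the <|role|> and <|end|> markers are a hypothetical
# template, not the format of any particular model.

def render_prompt(messages: list[dict[str, str]]) -> str:
    """Unpack structured role/content messages into one raw text prompt."""
    parts = [f"<|{m['role']}|>\n{m['content']}<|end|>\n" for m in messages]
    # Leave an open assistant turn so the model continues the text from here.
    parts.append("<|assistant|>\n")
    return "".join(parts)


def parse_completion(raw: str) -> dict[str, str]:
    """Pack the model's raw text continuation back into a structured message."""
    # Keep everything up to the first end marker the model emits.
    content = raw.split("<|end|>", 1)[0]
    return {"role": "assistant", "content": content.strip()}


messages = [
    {"role": "system", "content": "Translate the text into French."},
    {"role": "user", "content": "Good morning"},
]
print(render_prompt(messages))
print(parse_completion("Bonjour<|end|>"))
```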

Example Input

- Calls to the API typically consist of parameters including a `model` identifier and a list of `messages`.
- Each `message` has a `role` and `content`.
- The `system` role can be thought of as the instructions to the model.
- The `user` role can be thought of as the data to process.
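A request following this pattern might look like the Python sketch here. The model identifier is a hypothetical placeholder, the exact field names vary by provider, and the `check_request` helper is illustrative rather than part of any SDK.

```python
# Generic request shape: a model identifier plus a list of role/content messages.
request = {
    "model": "example-model-id",  # hypothetical placeholder, not a real model name
    "messages": [
        {"role": "system", "content": "Summarize the text in one sentence."},
        {"role": "user", "content": "LLM APIs take a model identifier and a list of messages."},
    ],
}


def check_request(req: dict) -> None:
    """Raise ValueError if the request does not follow the model + messages shape."""
    if not isinstance(req.get("model"), str):
        raise ValueError("request needs a string 'model' identifier")
    messages = req.get("messages")
    if not isinstance(messages, list) or not messages:
        raise ValueError("request needs a non-empty list of 'messages'")
    for msg in messages:
        # Every message carries exactly a role and its content.
        if set(msg) != {"role", "content"}:
            raise ValueError(f"message must have 'role' and 'content': {msg}")
        if msg["role"] not in {"system", "user", "assistant"}:
            raise ValueError(f"unknown role: {msg['role']!r}")


check_request(request)  # passes silently for a well-formed request
```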

The names of the roles might be a little confusing in an application context; they are a holdover from chatbots, where the system role tells the chatbot how to behave and the user role represents the user's input. Along with the `model` parameter, there are many other parameters that control the behavior of the model. We will cover the use of these parameters in later sections.
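In an application, the "user" content often comes from a program rather than a person typing. A minimal sketch, assuming a hypothetical `build_messages` helper, keeps the fixed instructions in the system message and passes each piece of data as the user message:

```python
def build_messages(instructions: str, data: str) -> list[dict[str, str]]:
    """Put the instructions in the system message and the data in the user message."""
    return [
        {"role": "system", "content": instructions},
        {"role": "user", "content": data},
    ]


# Here the "user" content is a support ticket loaded by the application,
# not something a human typed into a chat window.
ticket = "My order arrived damaged."
messages = build_messages(
    "Classify the support ticket as 'billing', 'shipping', or 'other'. Reply with one word.",
    ticket,
)
```

Keeping instructions and data in separate messages lets the same instructions be reused unchanged across many inputs.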

The output has a similar structure:

Example Output

The assistant role indicates that the message is a response from the model. We will see the use of the assistant role in the next section.
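A simplified response in the same spirit might look like this Python sketch. The shape is an assumption for illustration: OpenAI's Chat Completions response nests the message inside a `choices` list, and Anthropic's Messages API returns `content` as a list of typed blocks, so the exact path to the text differs by provider. The `reply_text` helper is hypothetical.

```python
# Simplified, provider-neutral response shape (an assumption for illustration).
response = {
    "model": "example-model-id",  # hypothetical placeholder
    "message": {"role": "assistant", "content": "Bonjour"},
}


def reply_text(resp: dict) -> str:
    """Return the text of the model's reply, checking it came from the assistant."""
    message = resp["message"]
    if message["role"] != "assistant":
        raise ValueError(f"expected an assistant message, got {message['role']!r}")
    return message["content"]


print(reply_text(response))
```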

Notebooks

The following notebooks demonstrate how to set system and user messages using LLMs from Google, OpenAI, and Anthropic. These links open in Google Colab, where you can run the code yourself.
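One concrete difference between providers: OpenAI's Chat Completions API accepts the system message inside the `messages` list, while Anthropic's Messages API takes the system prompt as a separate top-level `system` parameter. This Python sketch converts a generic message list into the Anthropic shape without calling any API; the helper name `to_anthropic_style` is hypothetical.

```python
# Illustrative helper, not part of any SDK. It assumes the generic
# role/content message list used throughout this section.
def to_anthropic_style(messages: list[dict[str, str]]) -> dict:
    """Move system messages into a separate top-level `system` argument."""
    system_texts = [m["content"] for m in messages if m["role"] == "system"]
    conversation = [m for m in messages if m["role"] != "system"]
    kwargs: dict = {"messages": conversation}
    if system_texts:
        # Join any system messages into a single system prompt string.
        kwargs["system"] = "\n\n".join(system_texts)
    return kwargs


generic = [
    {"role": "system", "content": "Answer in one word."},
    {"role": "user", "content": "What colour is the sky on a clear day?"},
]
anthropic_kwargs = to_anthropic_style(generic)
# These kwargs would still need `model` and the required `max_tokens`
# before being passed to the Anthropic SDK's messages.create call.
```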

Read next → AI is stateless

If AI Dev Explainer inspires you to build something cool → put it on GitHub, send us a link, and we will send you this sticker pack.